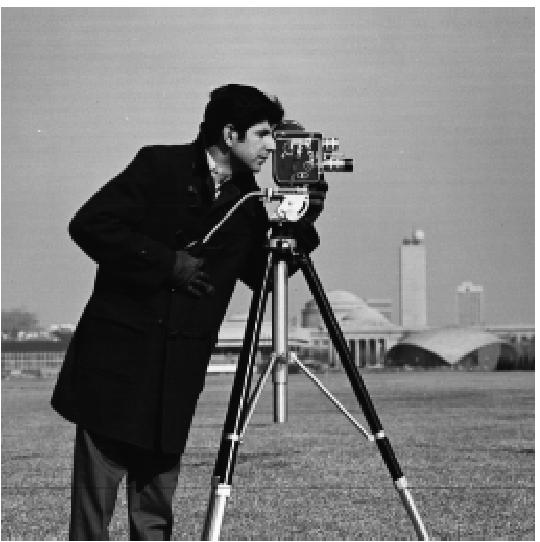

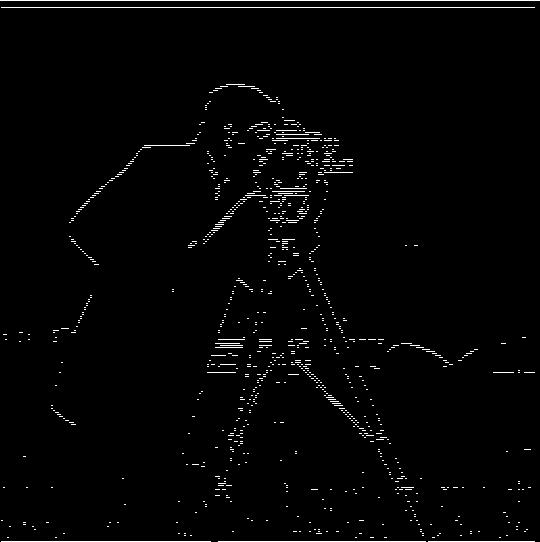

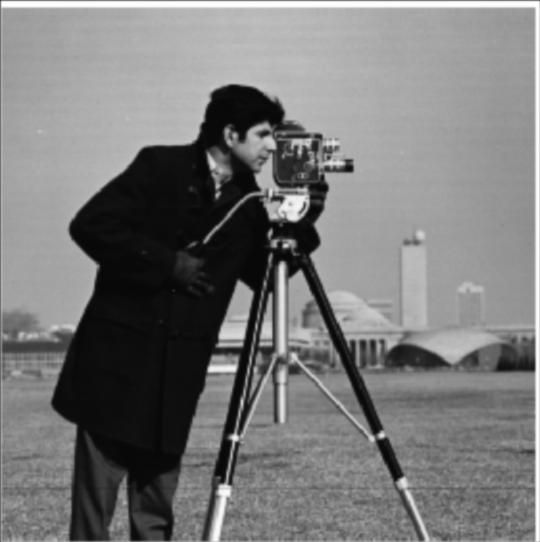

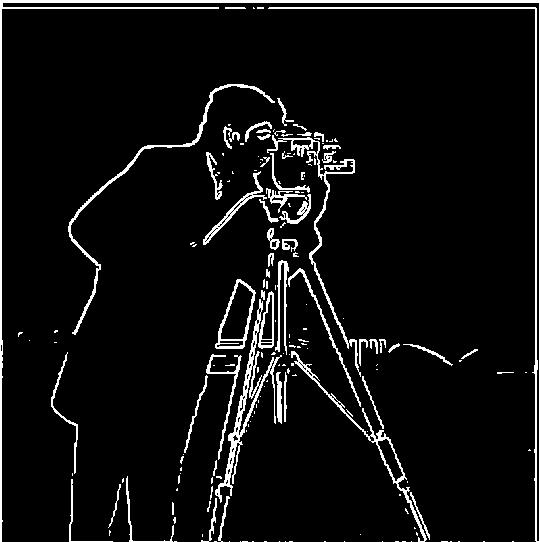

Cameraman Original Image

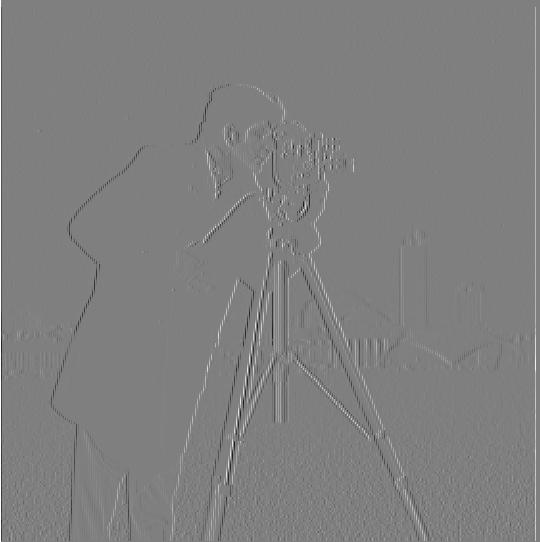

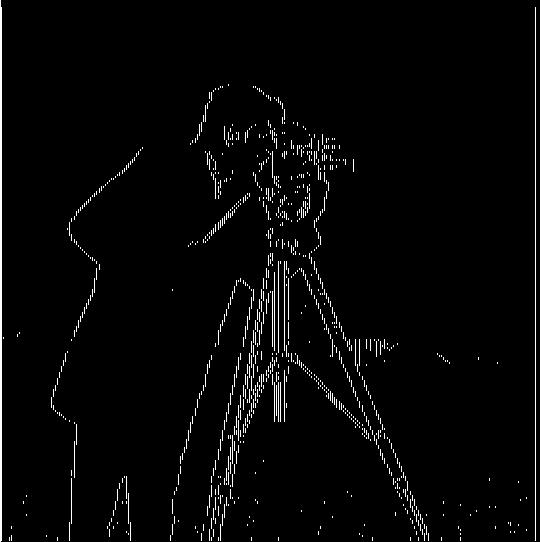

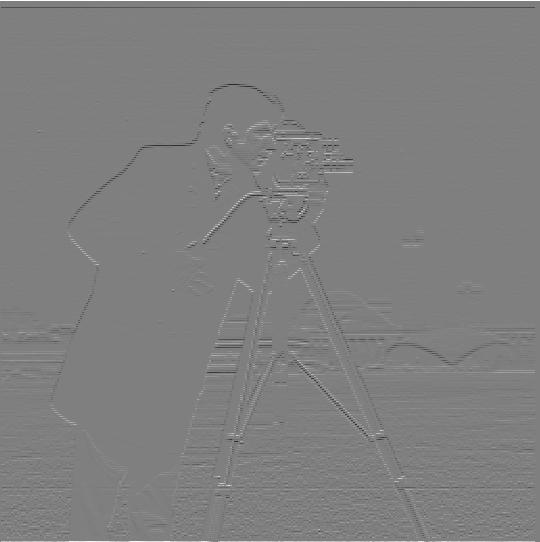

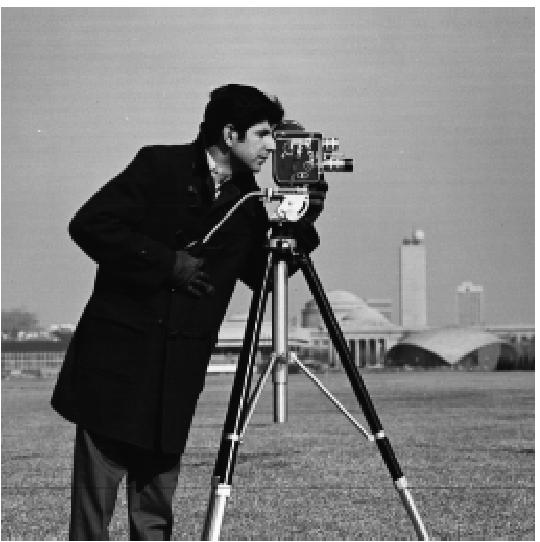

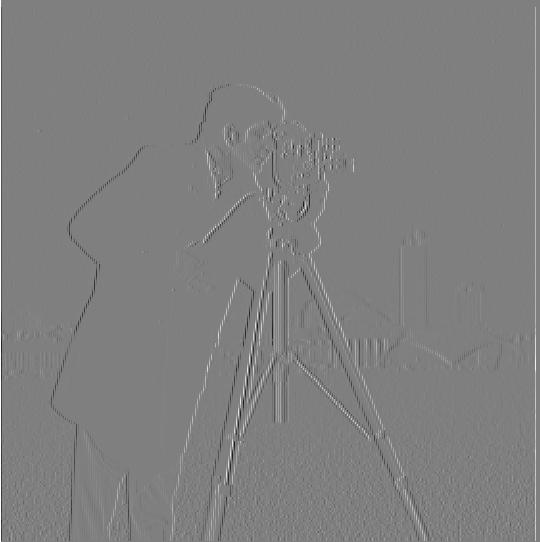

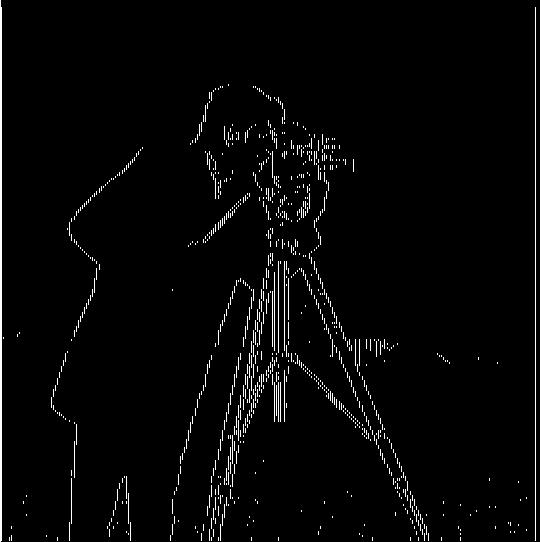

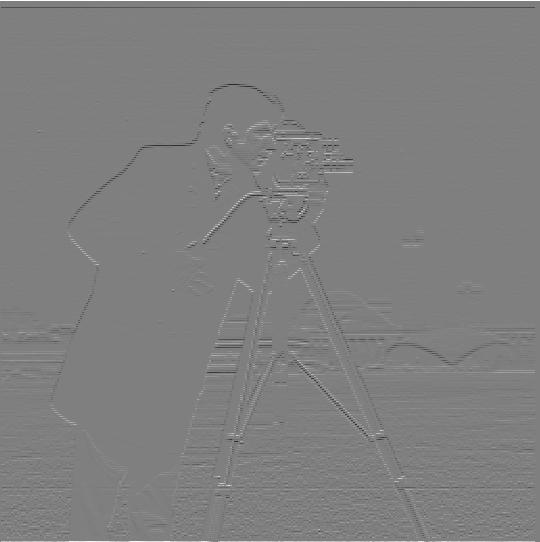

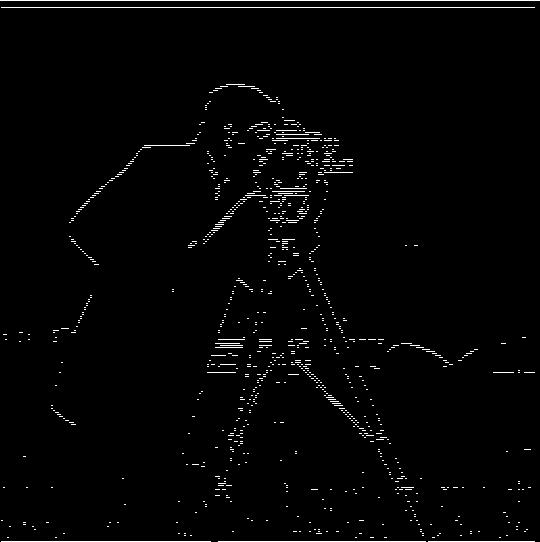

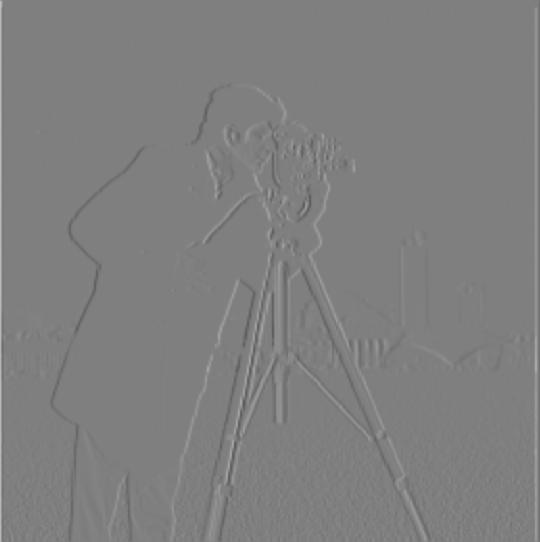

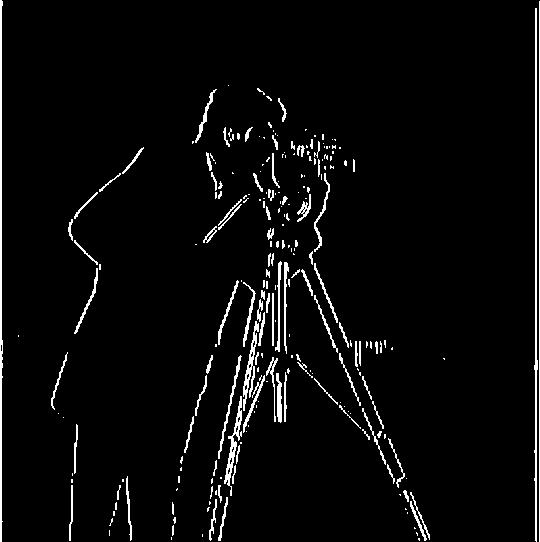

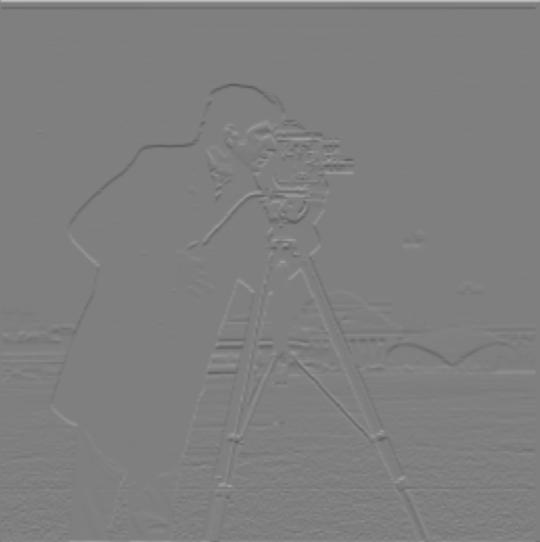

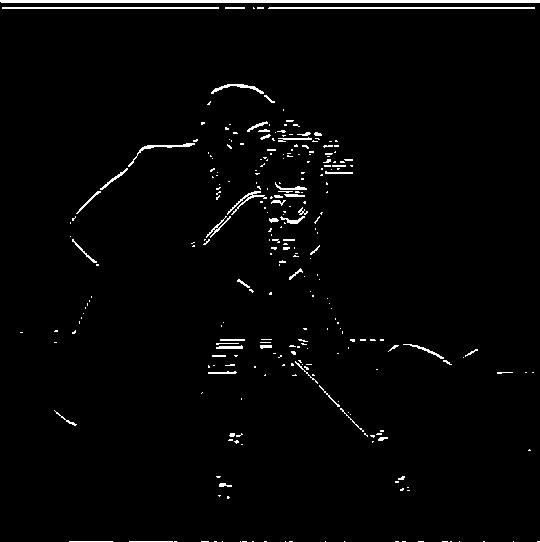

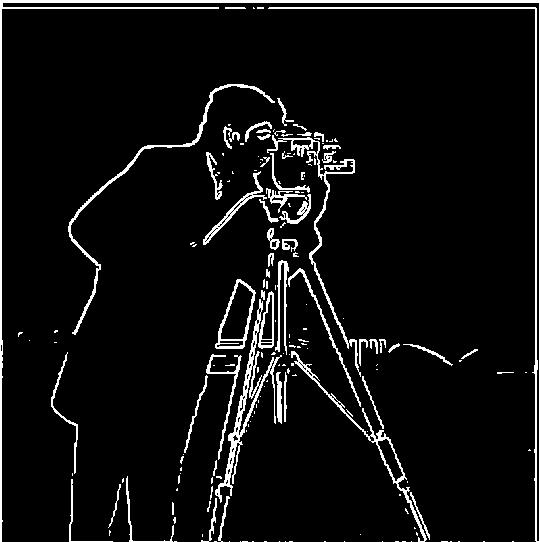

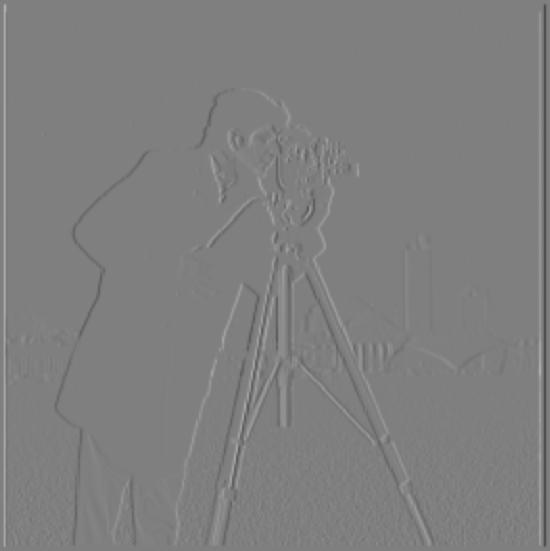

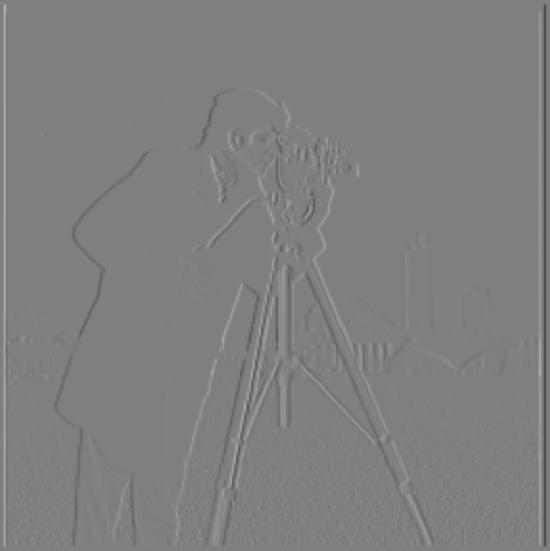

We apply these filters to the cameraman image shown below. Because the differences can range from -1 to 1, we rescale them to the 0 to 1 range for display so that negative derivative values remain visible. To calculate the gradient magnitude, we take, element-wise, the square root of the sum of the squared dx and dy values. To compute the binary edge images, we apply a threshold of 0.2 to the gradient magnitude, or to the absolute value of the partial difference when binarizing dx or dy on their own.
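The steps described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the code used to produce the results: the function names are hypothetical, the image is assumed to be a grayscale float array in the 0 to 1 range, and the sign convention and zero-filled border are assumptions, since the original filters are not shown here.

```python
import numpy as np

def finite_differences(img):
    """Horizontal (dx) and vertical (dy) differences of a 2D image in [0, 1].

    Assumption: each output pixel is the pixel minus its left (or upper)
    neighbor, and the first column (or row) is left at zero.
    """
    img = np.asarray(img, dtype=np.float64)
    dx = np.zeros_like(img)
    dy = np.zeros_like(img)
    dx[:, 1:] = img[:, 1:] - img[:, :-1]
    dy[1:, :] = img[1:, :] - img[:-1, :]
    return dx, dy

def to_display(diff):
    """Map differences from [-1, 1] to [0, 1] so negative values stay visible."""
    return (diff + 1.0) / 2.0

def gradient_magnitude(dx, dy):
    """Element-wise square root of the sum of squared partial differences."""
    return np.sqrt(dx ** 2 + dy ** 2)

def binarize(values, threshold=0.2):
    """Boolean edge map: absolute value above the threshold."""
    return np.abs(values) > threshold
```

For example, `binarize(gradient_magnitude(*finite_differences(img)))` would give a binarized gradient magnitude image, while `binarize(dx)` thresholds the absolute value of a single partial difference.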

Cameraman Original Image

| | Raw | Binarized |
|---|---|---|
| Dx | | |
| Dy | | |
| Magnitude | | |

Cameraman Blurred

| | Raw | Binarized |
|---|---|---|
| Dx | | |
| Dy | | |
| Magnitude | | |

| | Finite Difference | Finite Difference with Gaussian Filtering |
|---|---|---|
| Binarized Gradient Magnitude | | |

| | Finite Difference on Blurred | Derivative of Gaussian Filter |
|---|---|---|
| Dx | | |
| Dy | | |

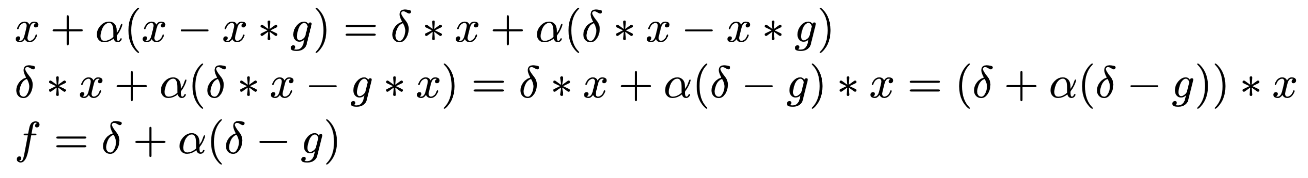

Thus, we construct the sharpening filter as the sum of a delta (unit impulse) and alpha times the difference between the delta and our Gaussian. For the delta to be well defined, the kernel needs a single center pixel, so we use an odd kernel size: the odd number closest to 6 times the provided sigma value. We also resize our images so that the longest side is around 1000 pixels, since the process is otherwise a bit slow. It is very important to clip the pixel values after filtering, because sharpening can push them outside the valid range and cause overflow and underflow errors.
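As a rough sketch of the filter described above, the following NumPy code builds the kernel as delta plus alpha times (delta minus Gaussian) and clips the result. The function names are hypothetical, the image is assumed to be a grayscale float array in the 0 to 1 range, and two details are assumptions rather than the original implementation: ties in "closest odd number" are rounded up (so sigma = 1 gives a 7x7 kernel), and borders are handled by replicating edge pixels.

```python
import numpy as np

def odd_kernel_size(sigma):
    """Odd integer closest to 6 * sigma (ties rounded up, an assumption)."""
    return 2 * int(np.floor(3 * sigma)) + 1

def gaussian_kernel(sigma):
    """Normalized 2D Gaussian built as the outer product of a 1D Gaussian."""
    size = odd_kernel_size(sigma)
    ax = np.arange(size) - size // 2
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    g /= g.sum()
    return np.outer(g, g)

def sharpen_kernel(alpha, sigma):
    """delta + alpha * (delta - gaussian), combined into a single kernel."""
    gauss = gaussian_kernel(sigma)
    delta = np.zeros_like(gauss)
    center = gauss.shape[0] // 2
    delta[center, center] = 1.0  # well defined because the size is odd
    return delta + alpha * (delta - gauss)

def convolve_same(img, kernel):
    """Direct 2D convolution with edge-replicated borders, same output size."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(img, ((ph, ph), (pw, pw)), mode="edge")
    flipped = kernel[::-1, ::-1]
    out = np.zeros(img.shape, dtype=np.float64)
    h, w = img.shape
    for i in range(kh):
        for j in range(kw):
            out += flipped[i, j] * padded[i:i + h, j:j + w]
    return out

def sharpen(img, alpha, sigma):
    """Sharpen a 2D image in [0, 1] and clip back to the valid range."""
    img = np.asarray(img, dtype=np.float64)
    return np.clip(convolve_same(img, sharpen_kernel(alpha, sigma)), 0.0, 1.0)
```

A color image could be handled by calling `sharpen` on each channel separately. Because the kernel sums to 1, flat regions are left unchanged and only edges are amplified, which is also where values overshoot and clipping matters.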

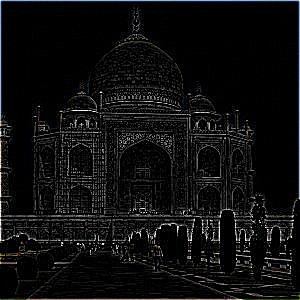

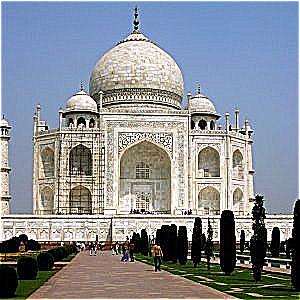

Taj Mahal Sharpening Details

|

||

|---|---|---|

| Original Image | After Sharpening | |

| Taj Mahal [alpha = 4, sigma = 1] |

|

|

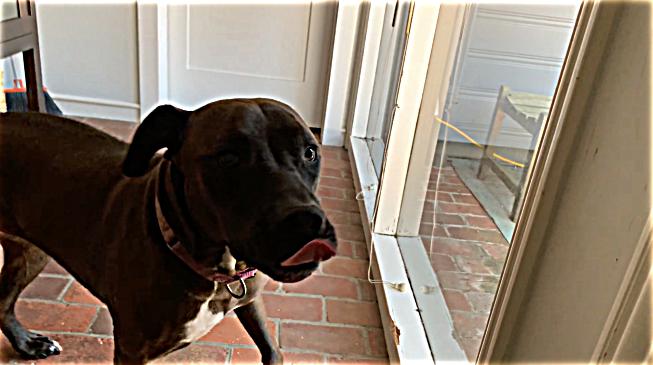

| Bear (My Dog) [alpha = 2, sigma = 3] |

|

|

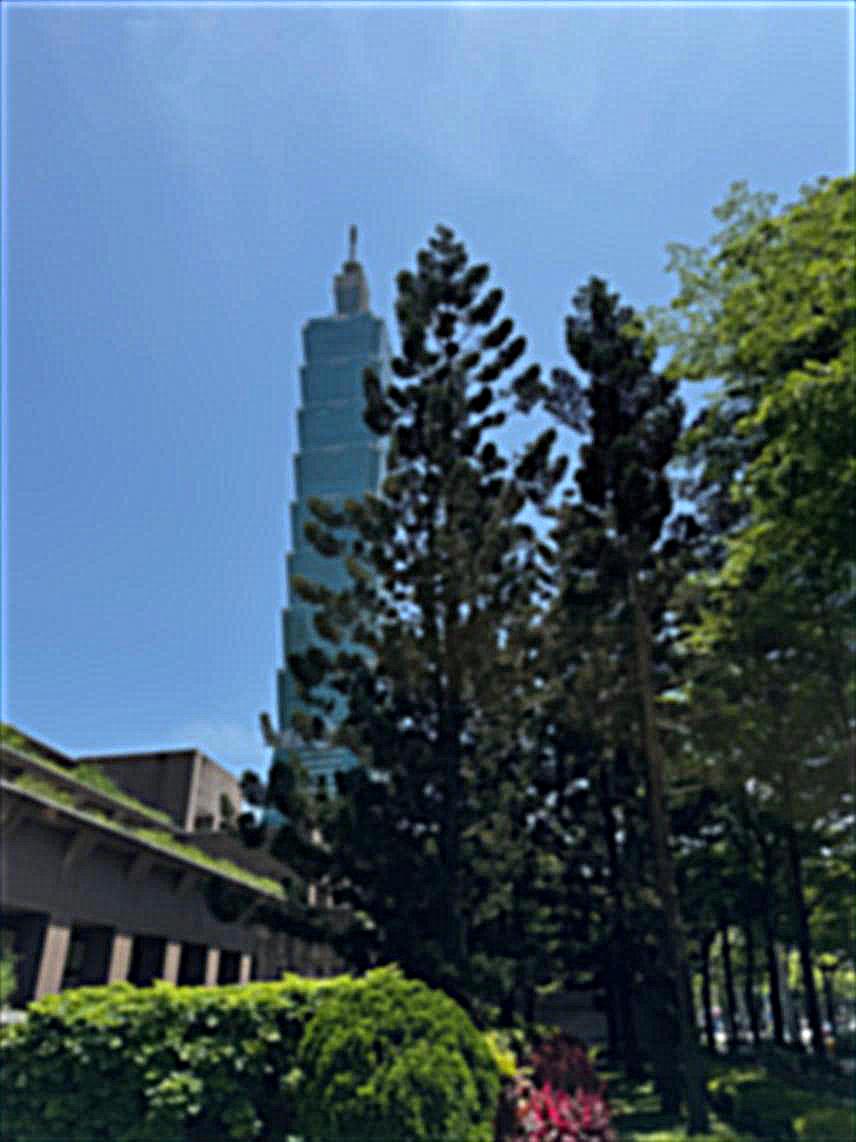

| Banqiao District, New Taipei (板桥区,新北市) [alpha = 2, sigma = 5] |

|

|

Taipei 101 Original Sharp Image

| | Original (Blurred) Image | After Sharpening |
|---|---|---|
| Taipei 101 [alpha = 3, sigma = 3] | | |

| Derek (Low) | Nutmeg (High) |
|---|---|

Derek + Nutmeg Hybrid Image

| Happy (Low) | Sad (High) |
|---|---|

Happiness + Sadness Hybrid Image

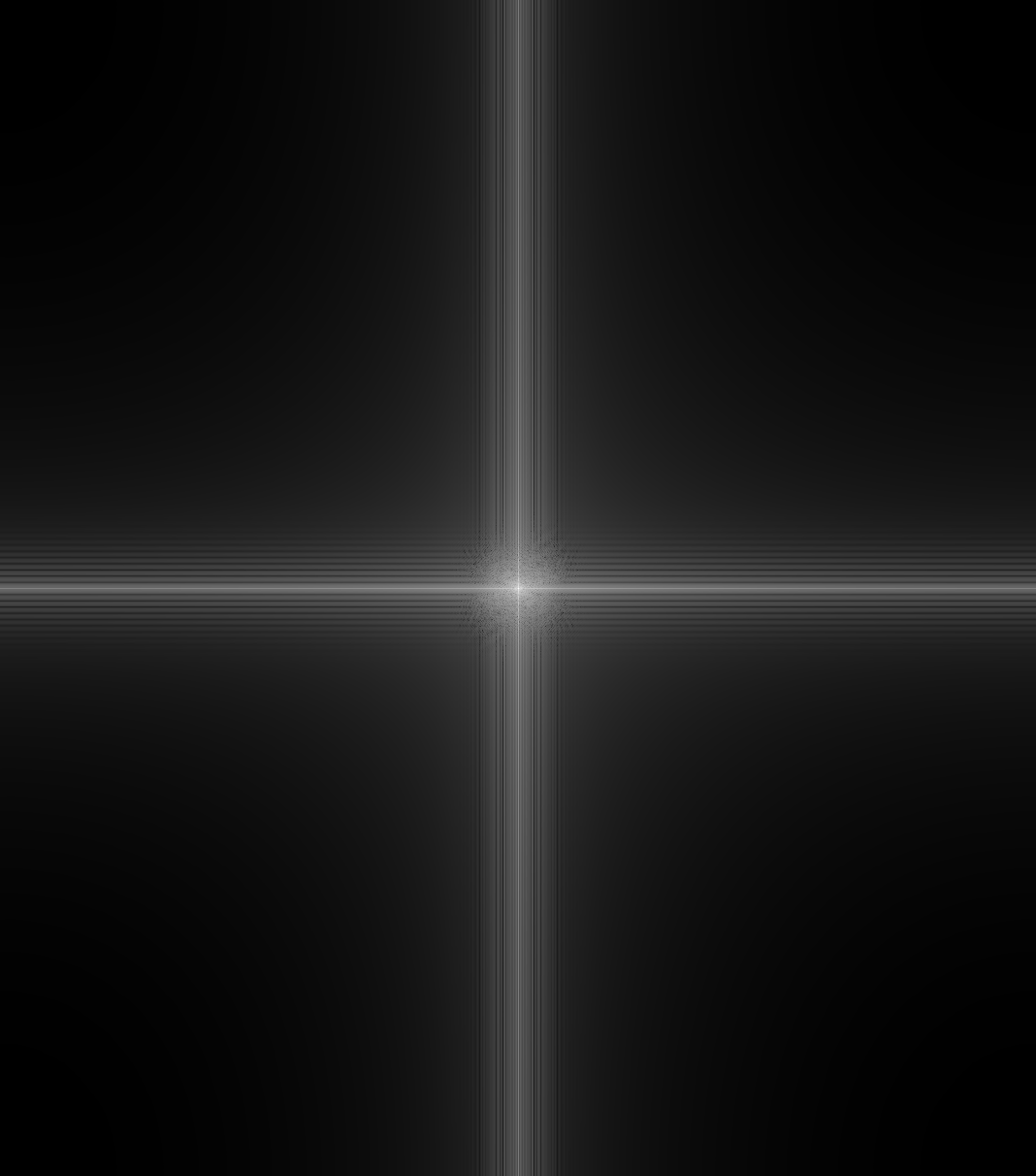

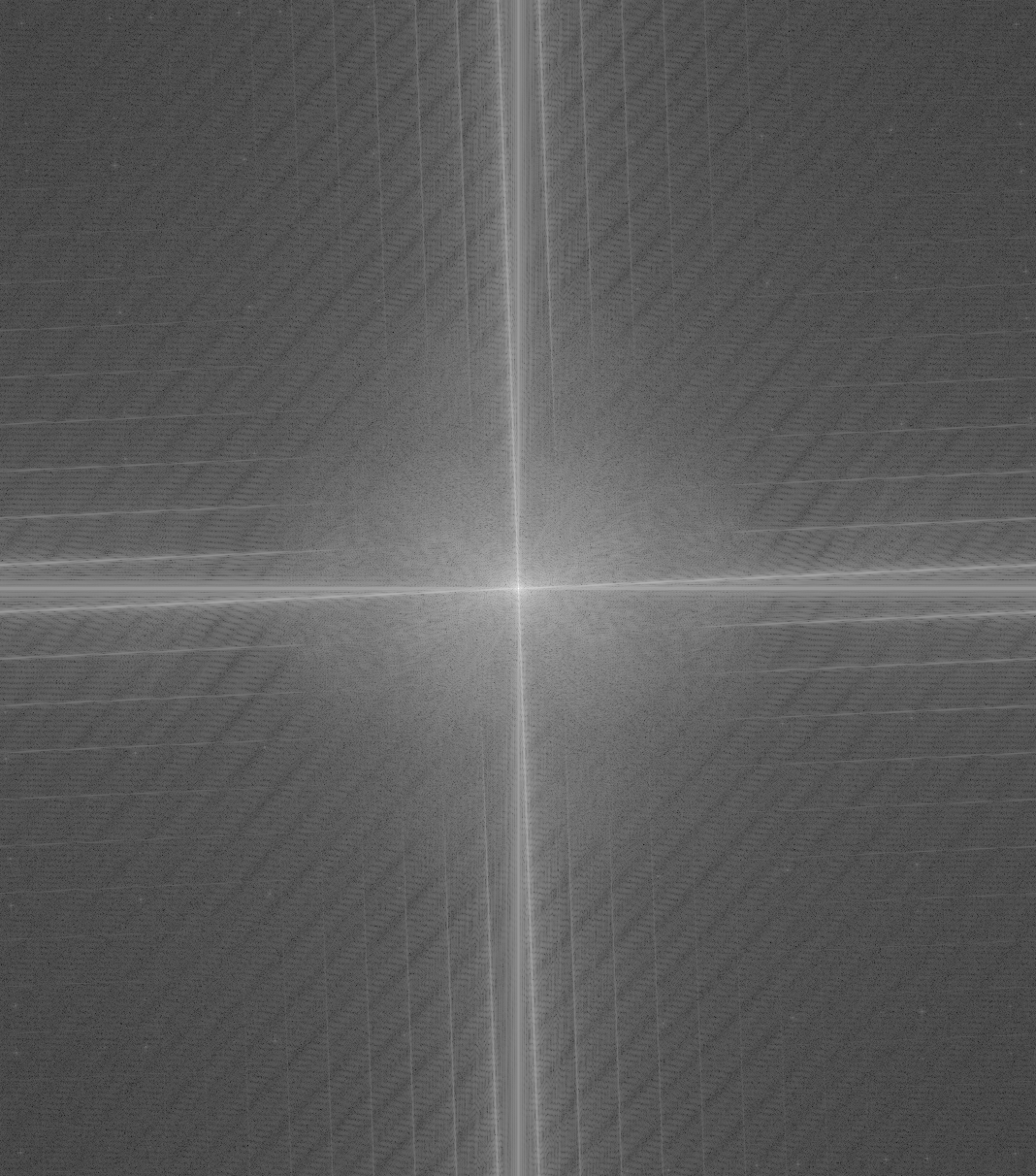

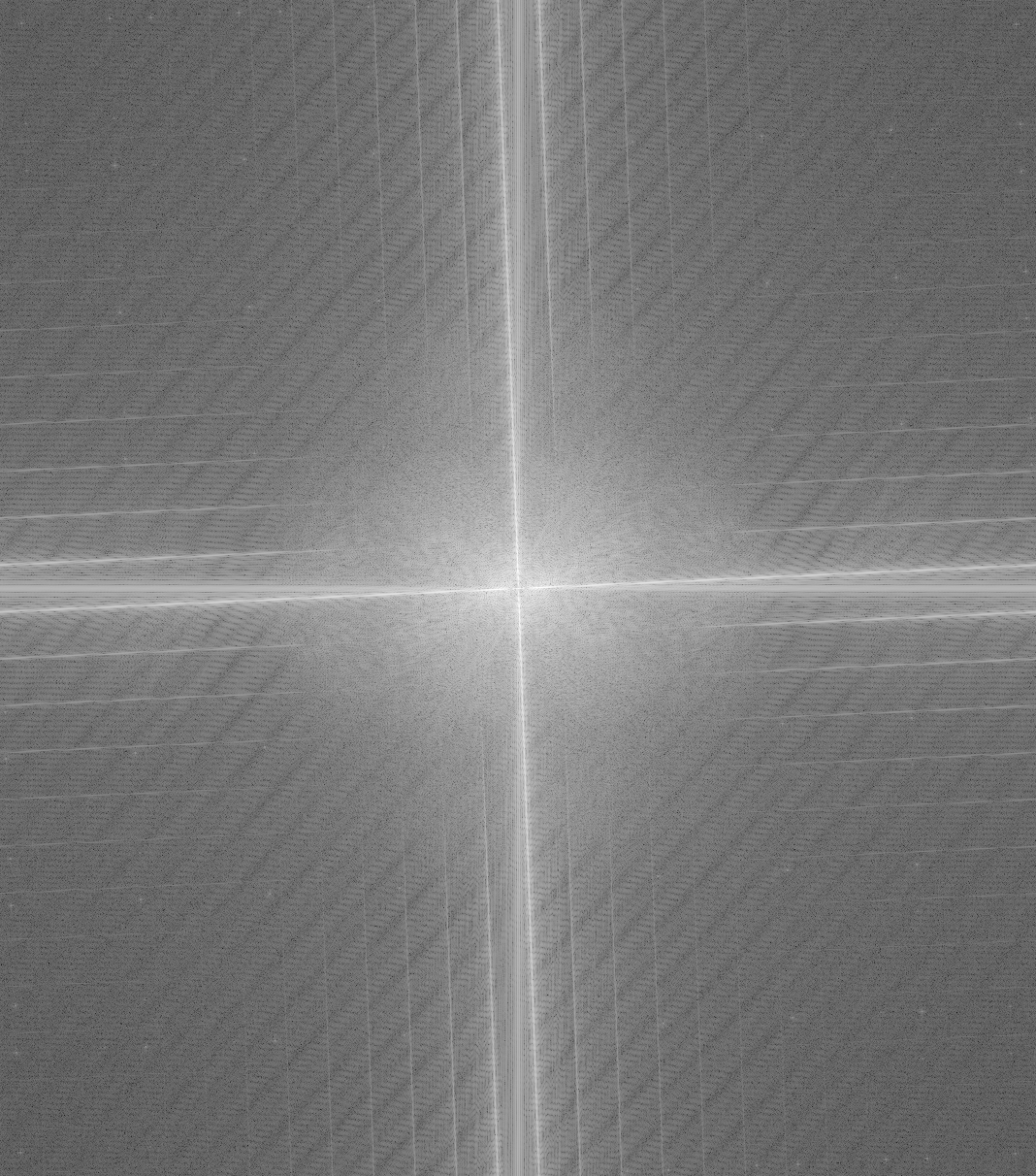

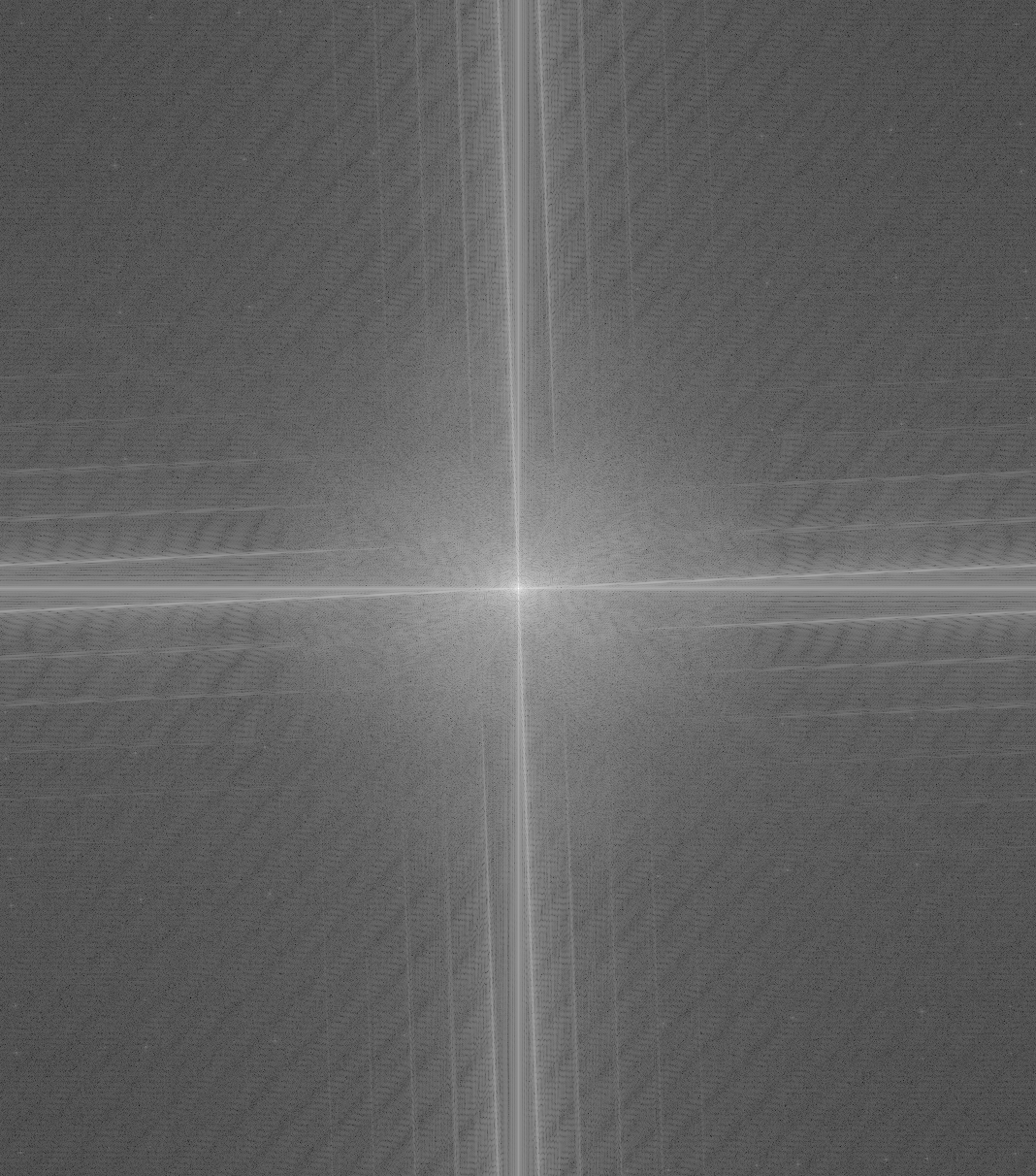

| | Original Image FFT | FFT After Filtering |
|---|---|---|
| Low Frequency (Happy) | | |
| High Frequency (Sad) | | |

Hybrid Image Plot

| Cat (Low) | Pug (High) |
|---|---|

Cat and Pug Hybrid Image

| Blooming Flower (Low) | Dying Flower (High) |
|---|---|

Blooming and Dying Flower Hybrid Image

| Intact Windshield (Low) | Broken Windshield (High) |
|---|---|

Intact and Broken Windshield Hybrid Image

| | Orange | Apple |
|---|---|---|
| Level 0 | | |
| Level 2 | | |
| Level 4 | | |
| Level 6 | | |

| Dog | Smiling Person | Mask |
|---|---|---|

| | Dog | Smiling Person | Blended At Level |
|---|---|---|---|
| Level 0 | | | |
| Level 2 | | | |
| Level 4 | | | |
| Level 6 | | | |

| Photoshop (No Blending) | Multiresolution Blending |
|---|---|

| S24 Ultra | iPhone 15 Pro Max |
|---|---|

S24 Ultra and iPhone 15 Pro Max Fused Image

| Apple | Orange |
|---|---|

Apple and Orange Fused Image